Author: Christian Timmerer, christian.timmerer@aau.at

Affiliation: Alpen-Adria-Universität (AAU) Klagenfurt, Austria & Bitmovin Inc.

Web site: http://timmerer.com

The 153rd MPEG meeting took place online from January 19-23, 2026. The official MPEG press release can be found here. This report highlights key outcomes from the meeting, with a focus on research directions relevant to the ACM SIGMM community:

- MPEG Roadmap

- Exploration on MPEG Gaussian Splat Coding (GSC)

- MPEG Immersive Video 2nd edition (new white paper)

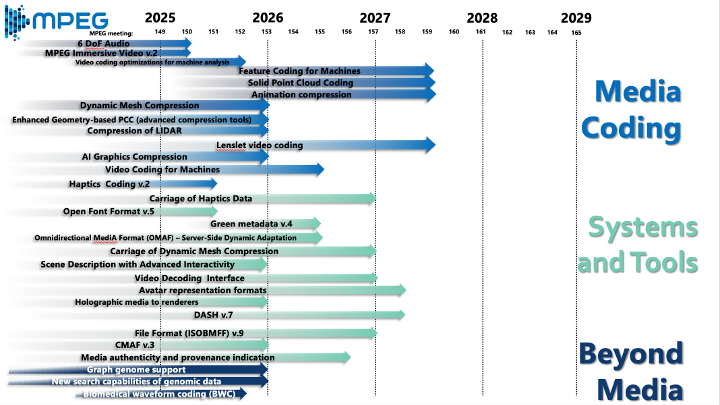

MPEG Roadmap

MPEG released an updated roadmap showing continued convergence of immersive and “beyond video” media with deployment-ready systems work. Near-term priorities include 6DoF experiences (MPEG Immersive Video v2 and 6DoF audio), volumetric representations (dynamic meshes, solid point clouds, LiDAR, and emerging Gaussian splat coding), and “coding for machines,” which treats visual and audio signals as inputs to downstream analytics rather than only as content for human consumption.

Research aspects: The most promising research opportunities sit at the intersections: renderer- and device-aware rate-distortion-complexity optimization for volumetric content, and the evolution of adaptive streaming and packaging (e.g., MPEG-DASH / CMAF) for interactive 6DoF services under tight latency constraints. Cross-cutting themes include media authenticity and provenance, green and energy metadata, and exploration threads on neural-network-based compression and compression of neural networks that foreshadow AI-native multimedia pipelines.

MPEG Gaussian Splat Coding (GSC)

Gaussian Splat Coding (GSC) is MPEG’s effort to standardize how 3D Gaussian Splatting (3DGS) content is encoded, decoded, and evaluated so that it can be exchanged and rendered consistently across platforms. Such content represents a scene as sparse “Gaussian splats” that combine geometry with rich attributes: scale and rotation, opacity, and spherical-harmonics appearance for view-dependent rendering. The main motivation is interoperability for immersive media pipelines: enabling reproducible results, shared benchmarks, and comparable rate-distortion-complexity trade-offs for use cases ranging from telepresence and immersive replay to mobile XR and digital twins, while retaining the visual strengths that made 3DGS attractive compared to heavier neural scene representations.
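To give a feel for why compression matters here, the following minimal sketch counts the uncompressed values behind one splat with the attributes listed above. It is only an illustration: the class name `SplatLayout`, the choice of spherical-harmonics degree 3, quaternion rotation, and 32-bit floats are assumptions borrowed from common 3DGS practice, not anything defined by MPEG GSC.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SplatLayout:
    """Hypothetical uncompressed layout of one Gaussian splat (illustrative only)."""

    sh_degree: int = 3        # assumed spherical-harmonics degree
    bytes_per_value: int = 4  # assumed 32-bit float storage

    def values_per_splat(self) -> int:
        position = 3   # x, y, z
        scale = 3      # one scale factor per axis
        rotation = 4   # unit quaternion (assumed parameterization)
        opacity = 1
        # (degree + 1)^2 SH coefficients for each of the three color channels
        appearance = 3 * (self.sh_degree + 1) ** 2
        return position + scale + rotation + opacity + appearance

    def raw_bytes(self, num_splats: int) -> int:
        """Raw size of a scene with num_splats splats, before any coding."""
        return num_splats * self.values_per_splat() * self.bytes_per_value


layout = SplatLayout()
print(layout.values_per_splat())      # values stored per splat
print(layout.raw_bytes(1_000_000))    # raw bytes for one million splats
```

Under these assumptions, most of the per-splat payload sits in the view-dependent appearance coefficients, which is one reason attribute coding receives so much attention in this exploration.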

The work remains in an exploration phase, coordinated across ISO/IEC JTC 1/SC 29 groups WG 4 (MPEG Video Coding) and WG 7 (MPEG Coding for 3D Graphics and Haptics) through Joint Exploration Experiments covering datasets and anchors, new coding tools, software (renderer and metrics), and Common Test Conditions (CTC). A notable systems thread is “lightweight GSC” for resource-constrained devices (single-frame, low-latency tracks using geometry-based and video-based pipelines with explicit time and memory targets), alongside an “early deployment” path via amendments to existing MPEG point-cloud codecs to more natively carry Gaussian-splat parameters. In parallel, MPEG is testing whether splat-specific tools can outperform straightforward mappings in quality, bitrate, and compute for real-time and streaming-centric scenarios.

Research aspects: Relevant SIGMM directions include splat-aware compression tools and rate-distortion-complexity optimization (including tracked vs. non-tracked temporal prediction); QoE evaluation for 6DoF navigation (metrics for view and temporal consistency and splat-specific artifacts); decoder and renderer co-design for real-time and mobile lightweight profiles (progressive and LOD-friendly layouts, GPU-friendly decode); and networked delivery problems such as adaptive streaming, ROI and view-dependent transmission, and loss resilience for splat parameters. Additional opportunities include interoperability work on reproducible benchmarking, conformance testing, and practical packaging and signaling for deployment.
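As a rough illustration of what rate-distortion-complexity optimization means in practice, the sketch below picks one operating point by minimizing a weighted cost J = D + λ·R + μ·C. The names `OperatingPoint` and `select_point`, the weights, and all numbers are hypothetical and chosen purely for illustration; they are not measurements from any GSC experiment.

```python
from typing import NamedTuple, Sequence


class OperatingPoint(NamedTuple):
    name: str
    rate_mbps: float    # R: bitrate
    distortion: float   # D: any lower-is-better quality loss measure
    decode_ms: float    # C: decode plus render time as a complexity proxy


def select_point(points: Sequence[OperatingPoint], lam: float, mu: float) -> OperatingPoint:
    """Return the point minimizing J = D + lam * R + mu * C."""
    if not points:
        raise ValueError("at least one operating point is required")
    return min(points, key=lambda p: p.distortion + lam * p.rate_mbps + mu * p.decode_ms)


# Made-up candidates: a cheap low-quality point and a costly high-quality one.
candidates = [
    OperatingPoint("light", rate_mbps=10.0, distortion=1.0, decode_ms=5.0),
    OperatingPoint("heavy", rate_mbps=20.0, distortion=0.5, decode_ms=12.0),
]

# Ignoring complexity favors the higher-quality point ...
print(select_point(candidates, lam=0.01, mu=0.0).name)
# ... while penalizing decode time (e.g., on a mobile device) favors the lighter one.
print(select_point(candidates, lam=0.01, mu=0.1).name)
```

The device-aware aspect enters through μ: a headset with a tight frame budget would use a larger complexity weight than a desktop renderer.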

MPEG Immersive Video 2nd edition (white paper)

The second edition of MPEG Immersive Video defines an interoperable bitstream and decoding process for efficient 6DoF immersive scene playback, supporting translational and rotational movement with motion parallax to reduce the discomfort often associated with pure 3DoF viewing. It primarily extends functionality without changing the high-level bitstream structure, adding capabilities such as capture-device information, additional projection types, and support for Simple Multi-Plane Image (MPI), alongside tools that better support geometry and attribute handling and depth-related processing.

Architecturally, MIV ingests multiple (unordered) camera views with geometry (depth and occupancy) and attributes (e.g., texture), then reduces inter-view redundancy by extracting patches and packing them into 2D “atlases” that are compressed using conventional video codecs. MIV-specific metadata signals how to reconstruct views from the atlases. The standard is built as an extension of the common Visual Volumetric Video-based Coding (V3C) bitstream framework shared with V-PCC, with profiles that preserve backward compatibility while introducing a new profile for added second-edition functionality and a tailored profile for full-plane MPI delivery.
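The patch-to-atlas step can be pictured with a very simple packer. The sketch below uses a basic shelf heuristic (sort patches by height, fill rows left to right); it is an assumption made for illustration and not the packing algorithm of the MIV reference software. The names `Patch`, `Placement`, and `shelf_pack` are hypothetical.

```python
from typing import List, NamedTuple, Sequence


class Patch(NamedTuple):
    view_id: int   # source view the patch was extracted from
    width: int
    height: int


class Placement(NamedTuple):
    patch: Patch
    x: int         # top-left position of the patch inside the atlas
    y: int


def shelf_pack(patches: Sequence[Patch], atlas_width: int, atlas_height: int) -> List[Placement]:
    """Pack patches into one atlas, row by row, tallest patches first."""
    placements: List[Placement] = []
    x = y = shelf_height = 0
    for patch in sorted(patches, key=lambda p: p.height, reverse=True):
        if patch.width > atlas_width:
            raise ValueError("patch is wider than the atlas")
        if x + patch.width > atlas_width:
            # Current row is full: open a new shelf below it.
            y += shelf_height
            x = 0
            shelf_height = 0
        if y + patch.height > atlas_height:
            raise ValueError("atlas is full")
        placements.append(Placement(patch, x, y))
        x += patch.width
        shelf_height = max(shelf_height, patch.height)
    return placements


patches = [Patch(0, 64, 32), Patch(1, 64, 64), Patch(2, 32, 16)]
for placement in shelf_pack(patches, atlas_width=128, atlas_height=128):
    print(placement.patch.view_id, placement.x, placement.y)
```

The recorded positions play the role of the metadata mentioned above: a decoder needs to know where each patch sits in the atlas and which view it came from in order to reconstruct the views.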

Research aspects: Key SIGMM topics include systems-efficient 6DoF delivery (better view and patch selection and atlas packing under latency and bandwidth constraints); rate-distortion-complexity-QoE optimization that accounts for decode and render cost (especially on HMDs and mobile devices) and motion-parallax comfort; adaptive delivery strategies (representation ladders, viewport- and pose-driven bit allocation, robust packetization and error resilience for atlas video plus metadata); renderer-aware metrics and subjective protocols for multi-view temporal consistency; and deployment-oriented work such as profile and level tuning, codec-group choices (HEVC / VVC), conformance testing, and exploiting second-edition features (capture-device information, depth tools, Simple MPI) for more reliable reconstruction and improved user experience.

Concluding Remarks

The meeting outcomes highlight a clear shift toward immersive and AI-enabled media systems where compression, rendering, delivery, and evaluation must be co-designed. These developments offer timely opportunities for the ACM SIGMM community to contribute reproducible benchmarks, perceptual metrics, and end-to-end streaming and systems research that can directly influence emerging standards and deployments.

The 154th MPEG meeting will be held in Santa Eulària, Spain, from April 27 to May 1, 2026. Click here for more information about MPEG meetings and ongoing developments.