The original blog post can be found at the Bitmovin Techblog and has been modified/updated here to focus on and highlight research aspects.

The 134th MPEG meeting was once again held as an online meeting, and the official press release can be found here and comprises the following items:

- First International Standard on Neural Network Compression for Multimedia Applications

- Completion of the carriage of VVC and EVC

- Completion of the carriage of V3C in ISOBMFF

- Call for Proposals: (a) New Advanced Genomics Features and Technologies, (b) MPEG-I Immersive Audio, and (c) Coded Representation of Haptics

- MPEG evaluated Responses on Incremental Compression of Neural Networks

- Progression of MPEG 3D Audio Standards

- The first milestone of development of Open Font Format (2nd amendment)

- Verification tests: (a) Low Complexity Enhancement Video Coding (LCEVC) Verification Test and (b) more application cases of Versatile Video Coding (VVC)

- Standardization work on Version 2 of VVC and VSEI started

In this column, the focus is on streaming-related aspects including a brief update about MPEG-DASH.

First International Standard on Neural Network Compression for Multimedia Applications

Artificial neural networks have been adopted for a broad range of tasks in multimedia analysis and processing, such as visual and acoustic classification, extraction of multimedia descriptors, or image and video coding. The trained neural networks for these applications contain many parameters (i.e., weights), resulting in a considerable size. Thus, transferring them to several clients (e.g., mobile phones, smart cameras) benefits from a compressed representation of neural networks.

At the 134th MPEG meeting, MPEG Video ratified the first international standard on Neural Network Compression for Multimedia Applications (ISO/IEC 15938-17), designed as a toolbox of compression technologies. The specification contains different methods for

- parameter reduction (e.g., pruning, sparsification, matrix decomposition),

- parameter transformation (e.g., quantization), and

- entropy coding.

These methods can be assembled into encoding pipelines that combine one method from each group, or more than one in the case of parameter reduction.
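The toolbox idea can be illustrated with a toy pipeline that chains one method from each group. This is only a sketch under stated assumptions: magnitude pruning, uniform 8-bit quantization, and `zlib` as a stand-in entropy coder are illustrative choices, not the tools specified in ISO/IEC 15938-17, and all function names are hypothetical.

```python
import random
import struct
import zlib


def prune(weights, keep_ratio):
    """Parameter reduction: keep only the largest-magnitude weights."""
    keep = max(1, int(len(weights) * keep_ratio))
    threshold = sorted((abs(w) for w in weights), reverse=True)[keep - 1]
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def quantize(weights, bits=8):
    """Parameter transformation: uniform quantization to signed integers."""
    max_level = 2 ** (bits - 1) - 1
    peak = max(abs(w) for w in weights) or 1.0
    step = peak / max_level
    return [round(w / step) for w in weights], step


def entropy_code(levels):
    """Entropy coding stand-in: zlib over one signed byte per level."""
    return zlib.compress(struct.pack(f"{len(levels)}b", *levels), 9)


def decode(payload, step):
    """Invert entropy coding and quantization (pruning is not invertible)."""
    raw = zlib.decompress(payload)
    return [level * step for level in struct.unpack(f"{len(raw)}b", raw)]


# Synthetic "layer": 10,000 Gaussian weights (an assumption for the demo).
rng = random.Random(0)
weights = [rng.gauss(0.0, 0.05) for _ in range(10_000)]

pruned = prune(weights, keep_ratio=0.1)
levels, step = quantize(pruned, bits=8)
payload = entropy_code(levels)
restored = decode(payload, step)

original_size = 4 * len(weights)  # float32 storage assumed
ratio = original_size / len(payload)
print(f"compression ratio: {ratio:.1f}x")
```

The ratio printed here describes synthetic weights only and says nothing about the accuracy of a real network after compression, which is what the figures reported for the standard account for.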

The results show that trained neural networks for many common multimedia problems, such as image or audio classification or image compression, can be compressed by a factor of 10-20 with no performance loss, and even by a factor of more than 30 with a performance trade-off. The specification is not limited to a particular neural network architecture and is independent of the choice of neural network exchange format. Interoperability with common neural network exchange formats is described in the annexes of the standard.

As neural networks become increasingly important, communicating them over heterogeneous networks to a plethora of devices raises various challenges, including the need for efficient compression, which this standard addresses. ISO/IEC 15938 is commonly referred to as MPEG-7 (or the “multimedia content description interface”), and this standard now becomes part 17 of MPEG-7.

Research aspects: As with all compression-related standards, research aspects are related to compression efficiency (lossy/lossless), computational complexity (runtime, memory), and quality-related aspects. Furthermore, the compression of neural networks for multimedia applications is likely to enable new types of applications and services to be deployed in the (near) future. Finally, simultaneous delivery and consumption (i.e., streaming) of neural networks, including incremental updates thereof, will become a requirement for networked media applications and services.

Carriage of Media Assets

At the 134th MPEG meeting, MPEG Systems completed the specifications for the carriage of various media assets in MPEG-2 Systems (Transport Stream) and the ISO Base Media File Format (ISOBMFF).

In particular, the standards for the carriage of Versatile Video Coding (VVC) and Essential Video Coding (EVC) over both MPEG-2 Transport Stream (M2TS) and ISO Base Media File Format (ISOBMFF) reached their final stages of standardization:

- For M2TS, the standard defines constraints on the elementary streams of VVC and EVC so that they can be carried in packetized elementary stream (PES) packets. Additionally, buffer management mechanisms and extensions to the transport system target decoder (T-STD) model are defined.

- For ISOBMFF, the standard defines the carriage of codec initialization information for VVC and EVC. It also defines samples and sub-samples that reflect the high-level bitstream structure and the independently decodable units of both video codecs. For VVC, signaling and extraction of a certain operating point are also supported.
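As background for the ISOBMFF item, the format stores everything, including codec initialization information and sample tables, in nested boxes that each start with a 32-bit size and a four-character type. The following minimal sketch walks that box structure on a synthetic byte string; the helper names `iter_boxes` and `make_box` are hypothetical, and the VVC- and EVC-specific boxes defined by the carriage standard are deliberately not modeled.

```python
import struct


def iter_boxes(data, offset=0, end=None):
    """Yield (type, payload_start, box_end) for each box in data[offset:end]."""
    end = len(data) if end is None else end
    while offset + 8 <= end:
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            if offset + 16 > end:
                break
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:  # box extends to the end of the enclosing container
            size = end - offset
        if size < header or offset + size > end:
            break  # malformed or truncated box; stop rather than guess
        yield box_type.decode("ascii", "replace"), offset + header, offset + size
        offset += size


def make_box(box_type, payload=b""):
    """Build a box with a 32-bit size field (helper for this demo only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic file: 'ftyp' followed by a 'moov' that contains an empty 'trak'.
sample = make_box(b"ftyp", b"isom\x00\x00\x00\x00") + make_box(b"moov", make_box(b"trak"))

top_level = [(t, e - s) for t, s, e in iter_boxes(sample)]
moov_start, moov_end = next((s, e) for t, s, e in iter_boxes(sample) if t == "moov")
children = [t for t, _, _ in iter_boxes(sample, moov_start, moov_end)]
print(top_level, children)
```

A real parser would recurse through the full container hierarchy down to the sample entries, which is where codec-specific configuration is carried.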

Finally, MPEG Systems completed the standard for the carriage of Visual Volumetric Video-based Coding (V3C) data using ISOBMFF. It supports media comprising multiple independent component bitstreams and takes into account that only some portions of immersive media assets need to be rendered, depending on the user's position and viewport. To that end, it defines metadata indicating the relationship between a region of the 3D spatial data to be rendered and its location in the bitstream. In addition, the standard specifies the delivery of ISOBMFF files containing V3C content over DASH and MMT.

Research aspects: Carriage of VVC, EVC, and V3C using M2TS or ISOBMFF provides an essential building block within the so-called multimedia systems layer, which typically offers an interoperable interface to the actual media assets and thus gives rise to a plethora of research challenges. These standards enable efficient and flexible provisioning and/or use of these media assets; how this is done is deliberately not defined in the standards and is subject to competition.

Call for Proposals and Verification Tests

At the 134th MPEG meeting, MPEG issued three Calls for Proposals (CfPs), which are briefly highlighted below:

- Coded Representation of Haptics: Haptics provide an additional layer of entertainment and sensory immersion beyond audio and visual media. This CfP aims to specify a coded representation of haptics data, e.g., to be carried using ISO Base Media File Format (ISOBMFF) files in the context of MPEG-DASH or other MPEG-I standards.

- MPEG-I Immersive Audio: Immersive Audio will complement other parts of MPEG-I (i.e., Part 3, “Immersive Video” and Part 2, “Systems Support”) in order to provide a suite of standards that will support a Virtual Reality (VR) or an Augmented Reality (AR) presentation in which the user can navigate and interact with the environment using 6 degrees of freedom (6 DoF), that is, spatial navigation (x, y, z) and user head orientation (yaw, pitch, roll).

- New Advanced Genomics Features and Technologies: This CfP aims to collect submissions of new technologies that can (i) provide improvements to the current compression, transport, and indexing capabilities of the ISO/IEC 23092 standards suite, particularly applied to data consisting of very long reads generated by 3rd generation sequencing devices, (ii) provide support for the representation and usage of graph genome references, (iii) include coding modes relying on machine learning processes, satisfying data access modalities required by machine learning and providing higher compression, and (iv) support interfaces with existing standards for the interchange of clinical data.

Detailed information, including instructions on how to respond to the calls for proposals, the requirements that must be considered, the test data to be used, and the submission and evaluation procedures for proponents, is available at www.mpeg.org.

Calls for proposals typically mark the beginning of the formal standardization work, whereas verification tests are conducted once a standard has been completed. At the 134th MPEG meeting, and despite the difficulties caused by the pandemic situation, MPEG completed verification tests for Versatile Video Coding (VVC) and Low Complexity Enhancement Video Coding (LCEVC).

For LCEVC, verification tests measured the benefits of enhancing four existing codecs of different generations (i.e., AVC, HEVC, EVC, VVC) using tools as defined in LCEVC within two sets of tests:

- The first set of tests compared LCEVC-enhanced encoding with full-resolution single-layer anchors. The average bit rate savings produced by LCEVC when enhancing AVC were determined to be approximately 46% for UHD and 28% for HD. When enhancing HEVC, the savings were approximately 31% for UHD and 24% for HD. Test results tend to indicate an overall benefit also when using LCEVC to enhance EVC and VVC.

- The second set of tests confirmed that LCEVC provided a more efficient means of resolution enhancement of half-resolution anchors than unguided up-sampling. Comparing LCEVC full-resolution encoding with the up-sampled half-resolution anchors, the average bit-rate savings when using LCEVC with AVC, HEVC, EVC and VVC were calculated to be approximately 28%, 34%, 38%, and 32% for UHD and 27%, 26%, 21%, and 21% for HD, respectively.
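To make the percentages above more tangible, the following sketch converts an average bit rate saving into the bit rate needed for comparable quality. The saving values are the first-set figures quoted above; the 10,000 kbit/s anchor bit rate and the function name are purely hypothetical, and an average saving does not predict the result for any individual sequence.

```python
# Average bit rate savings from the first set of LCEVC tests (from the text).
FIRST_SET_SAVINGS = {
    ("AVC", "UHD"): 0.46,
    ("AVC", "HD"): 0.28,
    ("HEVC", "UHD"): 0.31,
    ("HEVC", "HD"): 0.24,
}


def bitrate_at_equal_quality(anchor_kbps, saving):
    """Bit rate that matches the anchor's quality given a fractional saving."""
    if not 0.0 <= saving < 1.0:
        raise ValueError("saving must be in [0, 1)")
    return anchor_kbps * (1.0 - saving)


ANCHOR_KBPS = 10_000  # hypothetical single-layer anchor bit rate

for (codec, resolution), saving in FIRST_SET_SAVINGS.items():
    enhanced = bitrate_at_equal_quality(ANCHOR_KBPS, saving)
    print(f"LCEVC over {codec} ({resolution}): {enhanced:.0f} kbit/s")
```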

For VVC, this was already the second round of verification testing, covering the following aspects:

- 360-degree video for equirectangular and cubemap formats, where VVC shows on average more than 50% bit rate reduction compared to the previous major generation of MPEG video coding standards, known as High Efficiency Video Coding (HEVC) and developed in 2013,

- low-delay applications such as compression of conversational (teleconferencing) and gaming content, where the compression benefit is about 40% on average, and

- HD video streaming, with an average bit rate reduction of close to 50%.

A previous set of tests for 4K UHD content, completed in October 2020, had shown similar gains. These verification tests used formal subjective visual quality assessment testing with “naïve” human viewers. The tests were performed under a strict hygiene regime in two test laboratories to ensure safe conditions for the viewers and test managers.

Research aspects: CfPs offer a unique possibility for researchers to propose research results for adoption into future standards. Verification tests provide objective and/or subjective evaluations of standardized tools and typically conclude the life cycle of a standard. The results of the verification tests are usually publicly available and can be used as a baseline for future improvements of the respective standards, including the evaluation thereof.

DASH Update!

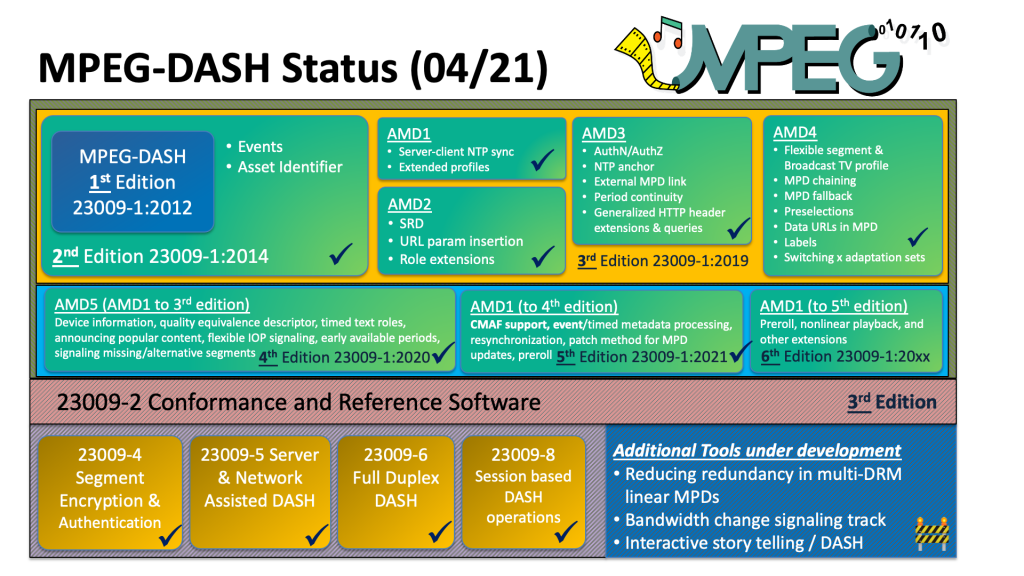

Finally, I’d like to provide a brief update on MPEG-DASH! At the 134th MPEG meeting, MPEG Systems recommended the approval of ISO/IEC FDIS 23009-1 5th edition. That is, the MPEG-DASH core specification will be available as a 5th edition sometime this year. Additionally, MPEG requests that this specification become freely available, which also marks an important milestone in the development of the MPEG-DASH standard. Most importantly, the 5th edition of this standard incorporates CMAF support as well as other enhancements defined in the amendment of the previous edition. Additionally, the MPEG-DASH subgroup of MPEG Systems is already working on the first amendment to the 5th edition, entitled preroll, nonlinear playback, and other extensions. It is expected that the 5th edition will also impact related specifications within MPEG as well as in other Standards Developing Organizations (SDOs) such as DASH-IF, which defines interoperability points (IOPs) for various codecs and others, or CTA WAVE (Web Application Video Ecosystem), which defines device playback capabilities such as the Common Media Client Data (CMCD). Both DASH-IF and CTA WAVE provide means for (conformance) test infrastructure for DASH and CMAF.

An updated overview of DASH standards/features can be found in the Figure below.

Research aspects: MPEG-DASH was ratified almost ten years ago, which has resulted in a plethora of research articles, mostly related to adaptive bitrate (ABR) algorithms and their impact on streaming performance, including the Quality of Experience (QoE). An overview of bitrate adaptation schemes is provided here, including a list of open challenges and issues.
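As a rough illustration of what an ABR algorithm does, the sketch below implements a simple throughput-based rule: estimate the available bandwidth from recent segment downloads and pick the highest representation that fits within a safety margin. The bitrate ladder, the 0.8 safety factor, and the function names are assumptions made for this example; the rule is not taken from MPEG-DASH, which deliberately leaves the adaptation logic to the client.

```python
def harmonic_mean(samples):
    """Harmonic mean, which damps the effect of short throughput spikes."""
    return len(samples) / sum(1.0 / s for s in samples)


def select_bitrate(ladder_kbps, throughput_kbps, safety=0.8):
    """Pick the highest bitrate that fits within the discounted estimate."""
    budget = safety * harmonic_mean(throughput_kbps)
    candidates = [b for b in sorted(ladder_kbps) if b <= budget]
    # Fall back to the lowest representation when nothing fits the budget.
    return candidates[-1] if candidates else min(ladder_kbps)


# Hypothetical bitrate ladder and measured segment throughputs (kbit/s).
ladder = [500, 1200, 2500, 5000, 8000]
choice = select_bitrate(ladder, [4000, 6000, 3000])
print(f"selected representation: {choice} kbit/s")
```

Schemes discussed in the literature typically also take the playback buffer level into account, or combine both signals, which this sketch omits.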

The 135th MPEG meeting will again be an online meeting in July 2021. Click here for more information about MPEG meetings and their developments.