The original blog post can be found at the Bitmovin Techblog and has been updated here to focus on and highlight research aspects.

The 117th MPEG meeting was held in Geneva, Switzerland, and its press release highlights the following aspects:

- MPEG issues Committee Draft of the Omnidirectional Media Application Format (OMAF)

- MPEG-H 3D Audio Verification Test Report

- MPEG Workshop on 5-Year Roadmap Successfully Held in Geneva

- Call for Proposals (CfP) for Point Cloud Compression (PCC)

- Preliminary Call for Evidence on video compression with capability beyond HEVC

- MPEG issues Committee Draft of the Media Orchestration (MORE) Standard

- Technical Report on HDR/WCG Video Coding

In this article, I’d like to focus on the topics related to multimedia communication starting with OMAF.

Omnidirectional Media Application Format (OMAF)

Real-time entertainment services deployed over the open, unmanaged Internet – streaming audio and video – now account for more than 70% of the evening traffic in North American fixed access networks, and it is assumed that this figure will reach 80% by 2020. More and more such bandwidth-hungry applications and services are pushing onto the market, including immersive media services such as virtual reality and, specifically, 360-degree video. However, the lack of appropriate standards and the resulting reduced interoperability are becoming an issue. Thus, MPEG has started a project referred to as Omnidirectional Media Application Format (OMAF). The first milestone of this standard has been reached: the committee draft (CD) was approved at the 117th MPEG meeting. Such application formats “are essentially superformats that combine selected technology components from MPEG (and other) standards to provide greater application interoperability, which helps satisfy users’ growing need for better-integrated multimedia solutions” [MPEG-A]. In the context of OMAF, the following aspects are defined:

- Equirectangular projection format (note: others might be added in the future)

- Metadata for interoperable rendering of 360-degree monoscopic and stereoscopic audio-visual data

- Storage format: ISO base media file format (ISOBMFF)

- Codecs: High Efficiency Video Coding (HEVC) and MPEG-H 3D audio
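To illustrate what the equirectangular projection does, here is a minimal Python sketch that maps a viewing direction on the sphere to a pixel position in an equirectangular frame. The function name and the angle conventions (yaw from -180 to 180 degrees, pitch from -90 to 90 degrees, yaw 0 at the horizontal center of the frame) are assumptions for illustration and are not taken from the OMAF text.

```python
def sphere_to_equirect(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to pixel coordinates in an equirectangular frame.

    Assumed conventions (illustrative only): yaw in [-180, 180] degrees with
    0 at the horizontal center, pitch in [-90, 90] degrees with +90 at the top.
    """
    u = (yaw_deg + 180.0) / 360.0   # 0.0 at the left edge, 1.0 at the right edge
    v = (90.0 - pitch_deg) / 180.0  # 0.0 at the top row, 1.0 at the bottom row
    # Clamp so that the extreme angles still land inside the frame.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return x, y


# Looking straight ahead lands in the middle of a 3840x1920 frame.
center = sphere_to_equirect(0.0, 0.0, 3840, 1920)
```

Longitude maps linearly to the horizontal axis and latitude to the vertical axis. This makes the format simple to produce and consume, but it oversamples the regions near the poles.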

OMAF is the first specification defined as part of a bigger project currently referred to as ISO/IEC 23090, Immersive Media (Coded Representation of Immersive Media). The project currently has the acronym MPEG-I, which replaces the previously used MPEG-VR (and that still might change in the future). It is expected that the standard will become a Final Draft International Standard (FDIS) by Q4 of 2017. Interestingly, it does not include AVC and AAC, probably the most obvious candidates for video and audio codecs, which have been massively deployed in the last decade and will probably remain a major dominator (and also denominator) in upcoming years. On the other hand, the equirectangular projection format is currently the only one defined, as it is already broadly used in off-the-shelf hardware/software solutions for the creation of omnidirectional/360-degree videos. Finally, the metadata formats enabling the rendering of 360-degree monoscopic and stereoscopic video are highly appreciated. A solution for MPEG-DASH based on AVC/AAC utilizing the equirectangular projection format for both monoscopic and stereoscopic video is shown as part of Bitmovin’s solution for VR and 360-degree video.

Research aspects related to OMAF can be summarized as follows:

- HEVC supports tiles, which allow for efficient streaming of omnidirectional video, but HEVC is not as widely deployed as AVC. Thus, it would be interesting to investigate how to mimic such a tile-based streaming approach utilizing AVC.

- The question of how to efficiently encode and package HEVC tile-based video is an open issue and calls for a tradeoff between tile flexibility and coding efficiency.

- When combined with MPEG-DASH (or similar), there’s a need to update the adaptation logic, as with tiles yet another dimension is added that needs to be considered in order to provide a good Quality of Experience (QoE).

- QoE is a big issue here and not well covered in the literature. Various aspects are worth investigating, including a comprehensive dataset to enable reproducibility of research results in this domain. Finally, as omnidirectional video allows for interactivity, the user experience is also becoming an issue that needs to be covered within the research community.
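To make the additional "tile dimension" of the adaptation logic more concrete, the following Python sketch shows one possible (and deliberately naive) strategy: every tile starts at the lowest bitrate, and the tiles inside the current viewport are upgraded round-robin as long as the bandwidth budget allows. The function name, the bitrate ladder, and the greedy strategy are hypothetical and only meant as a starting point for discussion. This is not an algorithm defined by MPEG-DASH or OMAF.

```python
def select_tile_bitrates(num_tiles, viewport_tiles, ladder_kbps, budget_kbps):
    """Greedy, viewport-aware bitrate selection for tiled streaming (a sketch).

    All tiles start at the lowest rung of the ladder; tiles in the viewport
    are upgraded one rung at a time, round-robin, while the budget allows.
    If the budget is below the cost of the lowest rung for all tiles,
    the lowest rung is still returned for every tile.
    """
    ladder = sorted(ladder_kbps)
    level = [0] * num_tiles          # index into the ladder, per tile
    spent = ladder[0] * num_tiles    # cost of the base layer for all tiles
    upgraded = True
    while upgraded:
        upgraded = False
        for tile in sorted(viewport_tiles):
            if level[tile] + 1 < len(ladder):
                extra = ladder[level[tile] + 1] - ladder[level[tile]]
                if spent + extra <= budget_kbps:
                    level[tile] += 1
                    spent += extra
                    upgraded = True
    return [ladder[idx] for idx in level]


# Eight tiles, tiles 2 and 3 are visible, 6 Mbit/s available in total.
choice = select_tile_bitrates(8, {2, 3}, [500, 1000, 2000], 6000)
```

A real player would additionally have to account for viewport prediction errors, buffer levels, and the perceptual impact of quality differences between neighboring tiles, which is exactly where the open QoE questions come in.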

A second topic I’d like to highlight in this blog post is related to the preliminary call for evidence on video compression with capability beyond HEVC.

Preliminary Call for Evidence on video compression with capability beyond HEVC

A call for evidence is issued to see whether sufficient technological potential exists to start a more rigorous phase of standardization. Currently, MPEG together with VCEG has developed a Joint Exploration Model (JEM) algorithm that is already known to provide bit rate reductions in the range of 20-30% for relevant test cases, as well as subjective quality benefits. The goal of this new standard, with a preliminary target date for completion around late 2020, is to develop technology providing better compression capability than the existing standard, not only for conventional video material but also for other domains such as HDR/WCG or VR/360-degree video. An important aspect in this area is certainly over-the-top video delivery (like with MPEG-DASH), which includes features such as scalability and Quality of Experience (QoE). Scalable video coding has been added to video coding standards since MPEG-2 but never reached widespread adoption. That might change if it becomes a prime-time feature of a new video codec, as scalable video coding clearly shows benefits for dynamic adaptive streaming over HTTP. QoE has already found its way into video coding, at least when it comes to evaluating the results, where subjective tests are now an integral part of every new video codec developed by MPEG (in addition to the usual PSNR measurements). Therefore, the most interesting research topic from a multimedia communication point of view would be to optimize the DASH-like delivery of such new codecs with respect to scalability and QoE. Note that if you don’t like scalable video coding, feel free to propose something else as long as it reduces storage and networking costs significantly.

MPEG Workshop “Global Media Technology Standards for an Immersive Age”

On January 18, 2017, MPEG successfully held a public workshop on “Global Media Technology Standards for an Immersive Age” hosting a series of keynotes from Bitmovin, DVB, Orange, Sky Italia, and Technicolor. Stefan Lederer, CEO of Bitmovin, discussed today’s and future challenges with new forms of content like 360°, AR and VR. All slides are available here and MPEG took their feedback into consideration in an update of its 5-year standardization roadmap. David Wood (EBU) reported on the DVB VR study mission and Ralf Schaefer (Technicolor) presented a snapshot on VR services. Gilles Teniou (Orange) discussed video formats for VR, pointing out a new opportunity to increase the content value but also raising the question of what is missing today. Finally, Massimo Bertolotti (Sky Italia) introduced his view on the immersive media experience age.

Overall, the workshop was well attended and, as mentioned above, MPEG is currently working on a new standards project related to immersive media. Currently, this project comprises five parts. The first part comprises a technical report describing the scope (incl. a kind of system architecture), use cases, and applications. The second part is OMAF (see above) and the third/fourth parts are related to immersive video and audio, respectively. Part five is about point cloud compression.

For those interested, please check out the slides from industry representatives in this field and draw your own conclusions as to what could be interesting for your own research. I’m happy to see any reactions, hints, etc. in the comments.

Finally, let’s have a look at what happened related to MPEG-DASH, a topic with a long history on this blog.

MPEG-DASH and CMAF: Friend or Foe?

For MPEG-DASH and CMAF it was a meeting “in between” official standardization stages. MPEG-DASH experts are still working on the third edition, which will be a consolidated version of the 2nd edition and various amendments and corrigenda. In the meantime, MPEG issued a white paper on the new features of MPEG-DASH, which I would like to highlight here.

- Spatial Relationship Description (SRD): allows describing tiles and regions of interest for partial delivery of media presentations. This is highly related to OMAF and VR/360-degree video streaming.

- External MPD linking: this feature allows describing the relationship between a single program/channel and a preview mosaic channel having all channels at once within the MPD.

- Period continuity: a simple signaling mechanism to indicate whether one period is a continuation of the previous one, which is relevant for ad-insertion or live programs.

- MPD chaining: allows for chaining two or more MPDs to each other, e.g., a pre-roll ad when joining a live program.

- Flexible segment format for broadcast TV: separates the signaling of the switching points and random access points in each stream and, thus, the content can be encoded with good compression efficiency while allowing a higher number of random access points but a lower frequency of switching points.

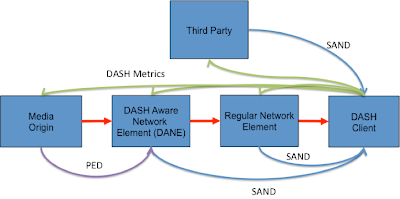

- Server and network-assisted DASH (SAND): enables asynchronous network-to-client and network-to-network communication of quality-related assisting information.

- DASH with server push and WebSockets: basically addresses issues related to the HTTP/2 push feature and WebSockets.
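As an illustration of how a client might use SRD for partial delivery, the following Python sketch parses an SRD `value` string and checks whether a tile intersects a viewport rectangle. It assumes the commonly documented layout of the SRD property (scheme `urn:mpeg:dash:srd:2014`, comma-separated `source_id, object_x, object_y, object_width, object_height, total_width, total_height`). The class and function names are hypothetical, and the exact field semantics should be verified against the specification.

```python
from typing import NamedTuple, Optional


class SRD(NamedTuple):
    source_id: int
    x: int
    y: int
    w: int
    h: int
    total_w: Optional[int]
    total_h: Optional[int]


def parse_srd(value: str) -> SRD:
    """Parse the comma-separated value of an SRD property (assumed layout)."""
    fields = [int(part.strip()) for part in value.split(",")]
    if len(fields) < 5:
        raise ValueError("SRD value needs at least 5 fields")
    # The total reference size is treated as optional in this sketch.
    total_w = fields[5] if len(fields) >= 7 else None
    total_h = fields[6] if len(fields) >= 7 else None
    return SRD(fields[0], fields[1], fields[2], fields[3], fields[4],
               total_w, total_h)


def overlaps(tile: SRD, vx: int, vy: int, vw: int, vh: int) -> bool:
    """True if the tile rectangle intersects the viewport rectangle."""
    return (tile.x < vx + vw and vx < tile.x + tile.w and
            tile.y < vy + vh and vy < tile.y + tile.h)


# Top-right tile of a 2x2 grid on a 3840x2160 reference space.
tile = parse_srd("0,1920,0,1920,1080,3840,2160")
visible = overlaps(tile, 1000, 500, 1000, 500)
```

With such a test, a client can request only those tiles (or request them in higher quality) that intersect the region the user is currently looking at.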

CMAF issued a study document which captures the current progress, and all national bodies are encouraged to take this into account when commenting on the Committee Draft (CD). To answer the question in the headline above, it looks more and more as if DASH and CMAF will become friends; let’s hope that the friendship lasts for a long time.

What else happened at the MPEG meeting?

- Committee Draft MORE (note: type in ‘man more’ on any unix/linux/mac terminal and you’ll get ‘less – opposite of more’;): MORE stands for “Media Orchestration” and provides a specification that enables the automated combination of multiple media sources (cameras, microphones) into a coherent multimedia experience. Additionally, it targets use cases where a multimedia experience is rendered on multiple devices simultaneously, again giving a consistent and coherent experience.

- Technical Report on HDR/WCG Video Coding: This technical report comprises conversion and coding practices for High Dynamic Range (HDR) and Wide Colour Gamut (WCG) video coding (ISO/IEC 23008-14). The purpose of this document is to provide a set of publicly referenceable recommended guidelines for the operation of AVC or HEVC systems adapted for compressing HDR/WCG video for consumer distribution applications.

- CfP Point Cloud Compression (PCC): This call solicits technologies for the coding of 3D point clouds with associated attributes such as color and material properties. It will be part of the immersive media project introduced above.

- MPEG-H 3D Audio verification test report: This report presents results of four subjective listening tests that assessed the performance of the Low Complexity Profile of MPEG-H 3D Audio. The tests covered a range of bit rates and a range of “immersive audio” use cases (i.e., from 22.2 down to 2.0 channel presentations). Seven test sites participated in the tests with a total of 288 listeners.

The next MPEG meeting will be held in Hobart, April 3-7, 2017. Feel free to contact us for any questions or comments.